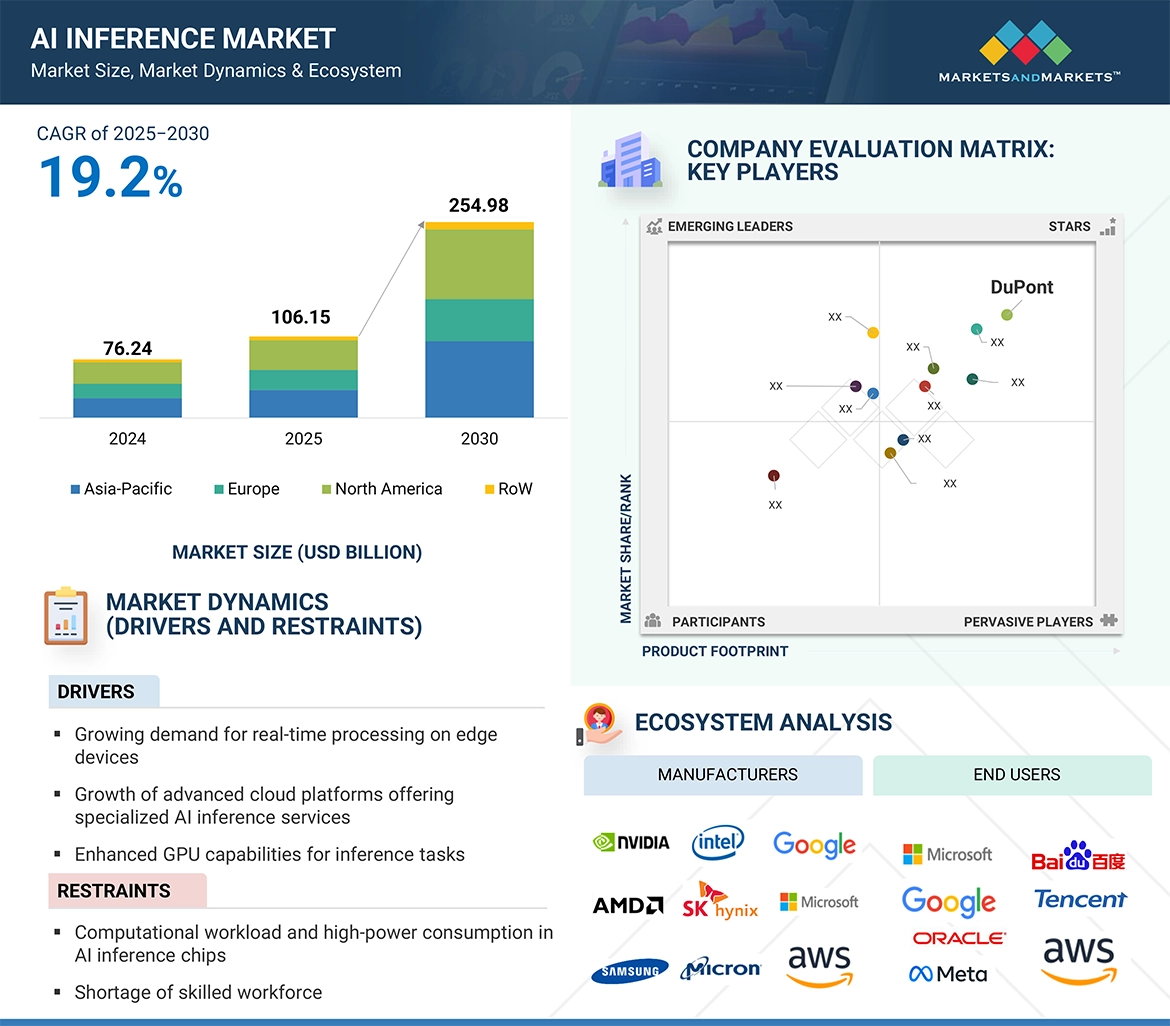

According to the report “AI Inference Market by Compute (GPU, CPU, FPGA), Memory (DDR, HBM), Network (NIC/Network Adapters, Interconnect), Deployment (On-premises, Cloud, Edge), Application (Generative AI, Machine Learning, NLP, Computer Vision) – Global Forecast to 2030”, the AI inference market is expected to grow from USD 106.15 billion in 2025 to USD 254.98 billion by 2030, at a Compound Annual Growth Rate (CAGR) of 19.2% from 2025 to 2030.
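The headline growth rate can be cross-checked from the two market-size figures. The short sketch below applies the standard CAGR formula, (end / start)^(1 / years) - 1, to the 2025 and 2030 values quoted above. The function and variable names are illustrative only and do not come from the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate as a fraction (0.19 means 19%)."""
    return (end_value / start_value) ** (1 / years) - 1


# Market-size figures quoted in the release, in USD billion.
start_usd_bn = 106.15  # 2025
end_usd_bn = 254.98    # 2030
years = 2030 - 2025    # five compounding periods

rate = cagr(start_usd_bn, end_usd_bn, years)
print(f"Implied CAGR: {rate:.1%}")  # prints "Implied CAGR: 19.2%"
```

The implied rate rounds to the 19.2% stated in the report, so the three headline numbers are mutually consistent.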

Download PDF Brochure @ https://www.marketsandmarkets.com/pdfdownloadNew.asp?id=189921964

Browse 322 market data Tables and 85 Figures spread through 365 Pages and in-depth TOC on “AI Inference Market”

View detailed Table of Content here – https://www.marketsandmarkets.com/Market-Reports/ai-inference-market-189921964.html

The AI inference market is growing due to the surge in generative AI and large language models (LLMs), which require robust inference capabilities for real-time applications like chatbots and content creation. Increasing data volumes and the need for cost-effective, energy-efficient solutions are pushing innovation in AI inference chips. Furthermore, the rise of 5G networks enables faster data transmission, supporting real-time AI inference in smart cities, autonomous vehicles, and industrial automation, creating new opportunities for market expansion.

By Network, the NIC/Network Adapters segment is projected to have the highest market share and the highest CAGR during the forecast period.

The NIC (Network Interface Card)/network adapters segment is expected to hold the highest market share and record the highest CAGR over the forecast period. Demand for this segment is growing because AI-based environments require high-speed, low-latency data transfer. As AI workloads, especially in data centers and cloud computing, grow and become more data-intensive, so does the need for network infrastructure that can efficiently move data between AI inference chips and distributed systems. NIC/network adapters allow AI systems to work with large datasets in real time and enable faster inference of AI models. For instance, in April 2024, Intel Corporation (US) released the Gaudi 3 enterprise AI accelerator, which provides Ethernet networking. It allows scalability for businesses that offer training, inference, and fine-tuning. The company also introduced AI-optimized Ethernet solutions, incorporating an AI NIC and AI connectivity chips, through the Ultra Ethernet Consortium. In addition, advancements in SmartNICs, which offload tasks such as encryption, compression, and data processing from CPUs, further improve the efficiency and scalability of AI inference. Their cost-effectiveness and ability to integrate with existing infrastructure make NICs a cornerstone of AI networking.

By Application, the Generative AI segment will account for the highest CAGR during the forecast period.

Generative AI is expected to grow rapidly in the AI inference industry because of its transformative capabilities, increased computational efficiency, and enhanced accessibility. Companies like NVIDIA Corporation and Advanced Micro Devices, Inc. are developing specialized GPUs with enhanced tensor cores optimized for the parallel processing demands of generative AI. In March 2024, NVIDIA introduced generative AI microservices that allow developers to create and deploy AI copilots across the massive installed base of CUDA-enabled GPUs. These microservices, integrated into NVIDIA AI Enterprise 5.0, accelerate inference tasks, retrieval-augmented generation (RAG), and LLM customization, shortening deployment time from weeks to minutes. Major application providers such as Adobe, SAP, and CrowdStrike are leveraging these innovations, highlighting the rising adoption of generative AI for real-time applications. Advancements in specialized hardware such as NVIDIA’s H100 GPUs and edge AI platforms such as Jetson will further enable efficient and scalable deployment of generative models across industries ranging from healthcare to cybersecurity. Such advancements show a growing reliance on generative AI for innovation and operational efficiency, which in turn is driving rapid growth in the AI inference market.

By End User, the Enterprises segment will account for the highest CAGR in the AI inference market from 2025 to 2030.

The enterprises segment will have the highest growth rate in the AI inference market. Enterprises have widely adopted AI solutions to improve operational efficiency, offer personalized customer experiences, and drive innovation. They have the resources and infrastructure to deploy large-scale AI models in domains such as customer service, supply chain optimization, and predictive analytics. Healthcare enterprises use AI for medical imaging and diagnostics, financial organizations for fraud and risk detection, and retailers for AI-based recommendation systems and inventory management. This growth is further propelled by advancements in enterprise-focused AI platforms that simplify the deployment and scaling of AI applications. For instance, in May 2024, Nutanix (US) collaborated with NVIDIA Corporation (US) to boost the adoption of generative AI. The integration of Nutanix’s GPT-in-a-Box 2.0 with NVIDIA’s NIM inference microservices will enable enterprises to deploy scalable, secure, and high-performance GenAI applications both centrally and at the edge. With its platform, Nutanix simplifies the deployment of AI models, reduces the need for specialized AI expertise, and empowers businesses to implement AI strategies. These innovations highlight the increasing pace at which enterprises are investing in AI inference for competitive advantage and operational improvement.

North America will hold the highest share in the AI inference market.

North America is projected to account for the largest share of the AI inference market during the forecast period. Growth in this region is driven mainly by the strong presence of leading technology companies and cloud providers, such as NVIDIA Corporation (US), Intel Corporation (US), Oracle Corporation (US), Micron Technology, Inc. (US), Google (US), and IBM (US), which are investing heavily in advanced AI inference technologies. These companies are building state-of-the-art data centers equipped with AI processors, GPUs, and other required hardware to meet the ever-increasing demand for AI applications across industries. In addition, governments in this region are stepping up efforts to improve AI inference capabilities. For instance, in September 2023, the US Department of State announced initiatives for the advancement of AI in partnership with eight companies: Google (US), Amazon (US), Anthropic PBC (US), Microsoft (US), Meta (US), NVIDIA Corporation (US), IBM (US), and OpenAI (US). They planned to invest more than USD 100 million in the infrastructure required for AI deployment, particularly in cloud computing, data centers, and AI hardware. These investments promote innovation and collaboration between the public and private sectors, boosting North America’s leadership in AI inference technologies.

Key Players

Key players operating in the AI inference market include NVIDIA Corporation (US), Advanced Micro Devices, Inc. (US), Intel Corporation (US), SK HYNIX INC. (South Korea), SAMSUNG (South Korea), Micron Technology, Inc. (US), Apple Inc. (US), Qualcomm Technologies, Inc. (US), Huawei Technologies Co., Ltd. (China), Google (US), Amazon Web Services, Inc. (US), Tesla (US), Microsoft (US), Meta (US), T-Head (China), Graphcore (UK), and Cerebras (US), among others.

About MarketsandMarkets™

MarketsandMarkets™ has been recognized as one of America’s Best Management Consulting Firms by Forbes, as per their recent report.

MarketsandMarkets™ is a blue ocean alternative in growth consulting and program management, leveraging a man-machine offering to drive supernormal growth for progressive organizations in the B2B space. With the widest lens on emerging technologies, we are proficient in co-creating supernormal growth for clients across the globe.

Today, 80% of Fortune 2000 companies rely on MarketsandMarkets, and 90 of the top 100 companies in each sector trust us to accelerate their revenue growth. With a global clientele of over 13,000 organizations, we help businesses thrive in a disruptive ecosystem.

The B2B economy is witnessing the emergence of $25 trillion in new revenue streams that are replacing existing ones within this decade. We work with clients on growth programs, helping them monetize this $25 trillion opportunity through our service lines – TAM Expansion, Go-to-Market (GTM) Strategy to Execution, Market Share Gain, Account Enablement, and Thought Leadership Marketing.

Built on the ‘GIVE Growth’ principle, we collaborate with several Forbes Global 2000 B2B companies to keep them future-ready. Our insights and strategies are powered by industry experts, cutting-edge AI, and our Market Intelligence Cloud, KnowledgeStore™, which integrates research and provides ecosystem-wide visibility into revenue shifts.

In addition, MarketsandMarkets SalesIQ enables sales teams to identify high-priority accounts and uncover hidden opportunities, helping them build more pipeline and win more deals with precision.

To find out more, visit www.marketsandmarkets.com or follow us on Twitter, LinkedIn and Facebook.

Media Contact

Company Name: MarketsandMarkets™ Research Private Ltd.

Contact Person: Mr. Rohan Salgarkar

Phone: 18886006441

Address: 1615 South Congress Ave. Suite 103, Delray Beach, FL 33445

City: Delray Beach

State: Florida

Country: United States

Website: https://www.marketsandmarkets.com/Market-Reports/ai-inference-market-189921964.html